How-to Guide: Compiling and Installing the FFmpeg Suite and Audio Video Codecs from Source on the Raspberry Pi

The goals of the following guide are two-fold: Firstly, to install a software package called FFmpeg, which contains numerous tools to facilitate the recording and manipulation of audio-video materials, along with several optional packages known as codecs.

Secondly, I aim not only to present a series of steps and commands, but also to shed a little light on the process, with an overview of some of the key tools and concepts behind obtaining, building, and installing software on a Linux platform.

For those eager to get up and running as quickly as possible, please see the related page: Compiling FFmpeg and Codecs from Source Code: All-in-One Script

- A Little Background Information

- Linux Software Installation

- FFmpeg Compilation and Installation

- Compiling Shared Libraries

- Compiling From Source: The Make Command

- A Note Before Proceeding

- Installing Prerequisite Build Tools

- Installing the YASM Assembler

- Compiling and Installing FFmpeg Codecs

- The FFmpeg Suite

- Compiling and Installing FFmpeg

- All-in-One FFmpeg Build and Installation Script

- Using FFmpeg to Transcode Files: Examples

- Related Posts

- External Links

Please see my earlier post: What is RetroPie? for a little background on both RetroPie and RetroArch.

My primary motivation for installing FFmpeg was to be able to capture real-time footage of gameplay from various console systems available in the RetroPie emulator suite, a number of which utilise the RetroArch framework that provides a facility to make live audio-video recordings.

At the time of writing, this facility is not enabled in the version of RetroArch supplied with RetroPie; however, it is possible to modify RetroArch and rebuild it so that recording can be switched on. I detail the steps required to enable and configure this feature in my guide: Recording Live Gameplay in RetroPie’s RetroArch Emulators Natively on the Raspberry Pi

As defined by the project’s Wikipedia page, FFmpeg is a free software project that produces libraries and programs for handling multimedia data.

FFmpeg includes libavcodec, an audio/video codec library… libavformat, an audio/video container mux and demux library, and the ffmpeg command line program for transcoding multimedia files.

FFmpeg is required for in-game recording to work. Unfortunately, Raspbian, the Debian-based operating system which underpins RetroPie, does not come with this package pre-installed; this limitation can be overcome, albeit with a little effort.

For those users coming from a Windows background, installing software on the Raspberry Pi can seem a little complicated, certainly in comparison to simply downloading and double-clicking a file.

Software installation on the Pi can, however, be very straightforward, thanks to the Advanced Package Tool (APT) provided by the Raspbian Linux operating system. Rather than downloading a file in a browser, with APT the command line is used to request a package from a repository, a catalogue of software known to be compatible with the specific installation of Raspbian being used.

For details on using APT, please see my post: Don’t Fear The Command Line: Raspbian Linux Shell Commands and Tools – Part 1

Not all compatible software is available in the repository, and, as is conventional in the Linux world, often packages need to be compiled from raw source code. This is the case with several of the FFmpeg codecs, and indeed the FFmpeg suite itself.

The following does not necessarily represent the optimal way to obtain and install a custom version of FFmpeg; as with all things Linux, there are many different approaches to reach a goal.

I’d like to note that the foundation of this post was the Ubuntu FFmpeg Compilation Guide, which I have expanded and adapted for specific compatibility with the Raspberry Pi in general, and the RetroArch emulator core in particular.

FFmpeg can utilise a vast array of codecs; when building the suite from source code we are free to include or exclude these at will.

Some components required by FFmpeg are available from Raspbian’s APT repository, whilst others, especially codecs, must be built from source code. The versions available via APT are not necessarily the latest; this is the case with the x264 codec, which we may therefore choose to build from source.

When compiling FFmpeg, all required codec components must be installed before FFmpeg itself is built and installed. For compatibility with RetroArch, the codecs utilised by FFmpeg must be built as shared, as opposed to static, libraries.

As defined at yolinux.com, there are two Linux C/C++ library types:

Static libraries (.a):

Library of object code which is linked with, and becomes part of the application.

Dynamically linked shared object libraries (.so):

There is only one form of this library but it can be used in two ways.

Dynamically linked at run time but statically aware. The libraries must be available during compile/link phase. The shared objects are not included into the executable component but are tied to the execution.

Dynamically loaded/unloaded and linked during execution (i.e. browser plug-in) using the dynamic linking loader system functions.

The Configure Script

The configure script generates the makefile, which contains commands to actually compile an executable or library file.

As the name suggests, the purpose of the script is to customise the software being built.

When building from source, to generate a shared library the configure script must be passed the parameter: --enable-shared

This causes the build process for the component to create shared library file(s), which can be linked to by FFmpeg; conventionally such files have an extension of .so.

Rather than using the configure script parameter --prefix to specify a destination folder for the result of the component build, we omit it, so that each component we build is output to the default directory of /usr/local.

This prevents difficulties later with ffmpeg, and other software, being unable to locate the library files; whilst this issue can be overcome by setting search paths, this requires additional effort and introduces the risk of incorrect configuration.

Thus, rather than a configure command appearing like this…

./configure --prefix="$HOME/ffmpeg_build" --enable-shared

…it should appear like this:

./configure --enable-shared
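To confirm that /usr/local/lib is already on the dynamic linker's search list, so that shared libraries installed there are found without extra configuration, the following is a minimal sketch of a shell function; dir_in_ld_conf is a hypothetical helper name of my own. It assumes the Debian/Raspbian layout, in which /etc/ld.so.conf includes the files under /etc/ld.so.conf.d/; on a stock Raspbian system /usr/local/lib is expected to be listed there.

```shell
#!/bin/sh
# Succeed if DIR appears as an entry in the dynamic linker's configuration.
# Usage: dir_in_ld_conf DIR [CONF_FILE...]
# With no CONF_FILE arguments, /etc/ld.so.conf and /etc/ld.so.conf.d/*.conf
# are read (the Debian/Raspbian layout, assumed here).
dir_in_ld_conf() {
    dir=$1
    shift
    if [ "$#" -eq 0 ]; then
        set -- /etc/ld.so.conf /etc/ld.so.conf.d/*.conf
    fi
    # Trim surrounding whitespace, then look for DIR as a complete line,
    # so comments and "include" directives never match.
    cat "$@" 2>/dev/null \
        | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
        | grep -qxF -e "$dir"
}
```

For example: dir_in_ld_conf /usr/local/lib && echo "/usr/local/lib is searched"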

The make command triggers the compilation of source code into executable files or libraries by executing a makefile script which has been built by the configure script.

On a multicore Raspberry Pi (model 2 and 3 Pis have four cores) the build process can be greatly accelerated by utilising more than a single core. The -j parameter specifies how many jobs (compilation commands) make runs in parallel, which in practice sets how many cores are used. For example, to use three cores:

sudo make -j3
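Rather than hard-coding the number, the job count can be derived from the number of cores reported by nproc. The sketch below (pick_jobs is a hypothetical helper name) caps the value at two by default, reflecting the thermal note that follows; adjust the cap to suit your cooling.

```shell
#!/bin/sh
# Choose a value for make's -j option: the number of online CPU cores,
# capped at a maximum (2 by default).
# Usage: pick_jobs [CORES] [CAP]; CORES defaults to the output of nproc.
pick_jobs() {
    cores=${1:-$(nproc)}
    cap=${2:-2}
    if [ "$cores" -lt "$cap" ]; then
        echo "$cores"
    else
        echo "$cap"
    fi
}
```

Usage: make -j"$(pick_jobs)"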

Note: on a Raspberry Pi 3, running multiple cores at 100 % CPU greatly increases the temperature of the system, leading to ‘thermal regulation’ which reduces the clock speed of the CPU, defeating the gains of using multiple cores. When building software such as FFmpeg, which takes approximately 35 minutes on an overclocked Pi 3, I use:

make -j2

Using two cores balances speed and temperature, allowing the CPU to run at its full overclocked speed (1350 MHz on my system) whilst staying below 80 degrees Celsius, the default cut-off point at which thermal regulation forces the CPU to underclock (often to 600 MHz, half the 1.2 GHz default speed).
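To see whether a build is pushing the CPU towards the thermal limit, the temperature can be watched from a second terminal whilst make runs. The sketch below reads /sys/class/thermal/thermal_zone0/temp, which on Raspbian reports millidegrees Celsius; the function names are hypothetical, and vcgencmd measure_temp is an alternative source of the same reading.

```shell
#!/bin/sh
# Convert a reading in millidegrees Celsius to degrees with one decimal place.
millic_to_c() {
    awk -v m="$1" 'BEGIN { printf "%.1f\n", m / 1000 }'
}

# Print the current CPU temperature every 5 seconds until interrupted (Ctrl+C).
watch_temp() {
    while :; do
        millic_to_c "$(cat /sys/class/thermal/thermal_zone0/temp)"
        sleep 5
    done
}
```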

For more information regarding overclocking and underclocking, please see my previous post entitled Overclocking the Raspberry Pi 3: Thermal Limits and Optimising for Single vs Multicore Performance.

I strongly advise that a full backup be made of the system, as there is no simple method to reverse the actions of the compilation and installation processes described below.

A full image of the Raspberry Pi’s SD Card can be made using a tool such as Win32DiskImager.

Is a package available in the APT cache, and if so, which version?

apt-cache show packagename

Where are the components of a package installed to?

dpkg -L packagename
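The output of these commands can be filtered in a script. As a sketch (both function names are hypothetical), the first helper extracts the version from apt-cache show output, and the second keeps only the shared library (.so) files from a dpkg -L listing.

```shell
#!/bin/sh
# Print the value of the first "Version:" field read from standard input,
# e.g. from: apt-cache show yasm | first_version
first_version() {
    awk '/^Version:/ { print $2; exit }'
}

# Keep only paths ending in .so or .so.N[.N...],
# e.g. from: dpkg -L libmp3lame-dev | shared_objects
shared_objects() {
    grep -E '\.so(\.[0-9]+)*$'
}
```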

Firstly, update the APT repository information to ensure the system can retrieve the latest version of components:

sudo apt-get update

Set up working directories to be used during the installation and build process:

cd ~
mkdir ~/ffmpeg_sources
mkdir ~/ffmpeg_build

Install various tools and packages, including audio-video codecs, required for building FFmpeg:

sudo apt-get -y install autoconf automake build-essential libass-dev libfreetype6-dev \
libsdl1.2-dev libtheora-dev libtool libva-dev libvdpau-dev libvorbis-dev libxcb1-dev libxcb-shm0-dev \
libxcb-xfixes0-dev pkg-config texinfo zlib1g-dev

For a description of each package contained in the above command, please see the supplementary information page.
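To see which of these packages are not yet installed before running apt-get, a sketch like the following may help; missing_packages is a hypothetical helper that asks dpkg-query for each package's status and prints the names not reported as installed.

```shell
#!/bin/sh
# Print each named package that dpkg does not report as installed.
# Usage: missing_packages PACKAGE...
missing_packages() {
    for pkg in "$@"; do
        # An installed package has a status such as "install ok installed".
        if ! dpkg-query -W --showformat='${Status}\n' "$pkg" 2>/dev/null \
                | grep -q 'ok installed'; then
            printf '%s\n' "$pkg"
        fi
    done
}
```

For example: missing_packages autoconf automake build-essential pkg-config yasm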

Description from APT: Modular assembler with multiple syntax support

Yasm is a complete rewrite of the NASM assembler. It supports multiple assembler syntaxes (eg, NASM, GAS, TASM, etc.) in addition to multiple output object formats (binary objects, COFF, Win32, ELF32, ELF64) and even multiple instruction sets (including AMD64). It also has an optimiser module.

sudo apt-get install yasm

Minimum version required: 1.2.0

Version installed at time of writing: 1.2.0-2
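When a component states a minimum version, as here, the comparison can be scripted. The sketch below relies on GNU sort's -V (version sort) option, so that, for example, 1.10.0 correctly ranks above 1.9.0; version_ge is a hypothetical name. On Debian-based systems, dpkg --compare-versions A ge B performs the same test using Debian's own version rules.

```shell
#!/bin/sh
# Succeed if version $1 is greater than or equal to version $2.
# The smaller of the two sorts first; if that is $2, then $1 >= $2.
version_ge() {
    [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example: version_ge 3.99.5 3.98.3 && echo "new enough"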

The --host parameter tells the configure script which platform the compiled code is intended to run on, allowing the output to be targeted at a specific processor. This value specifies a generic ARM processor and Linux operating system:

--host=arm-unknown-linux-gnueabi

The following is a more specific value, but I have only seen it referenced in cross-compiler guides, rather than those for local Raspberry Pi use:

--host=arm-bcm2708hardfp-linux-gnueabi

Description from APT: video encoder for the H.264/MPEG-4 AVC standard

x264 is an advanced commandline encoder for creating H.264 (MPEG-4 AVC) video streams

The x264 package is available in the APT repository; however, it is not the latest version (which contains experimental support for Raspberry Pi x264 hardware encoding). To benefit from the latest features, I chose to build x264 from source.

If you require libx264 for your FFmpeg build, the following parameter must be passed to the FFmpeg configure script (see the FFmpeg build section, below):

--enable-libx264

To utilise the latest x264 library, obtain and build the source code, and install x264 by issuing the following commands at the console or a terminal window:

cd /home/pi/ffmpeg_sources
git clone git://git.videolan.org/x264
cd x264
./configure --host=arm-unknown-linux-gnueabi --enable-shared --disable-opencl
sudo make -j2
sudo make install
sudo make clean
sudo make distclean
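Each codec built from source in this guide follows the same configure, make, install, and clean pattern as the x264 commands above. The following is a minimal sketch of a helper that captures it, assuming the source directory has already been downloaded and unpacked; build_in and run are hypothetical names, and setting DRY_RUN prints the commands instead of running them. Unlike the commands above, it compiles as the normal user; sudo is used for the install step and for the final clean, since make install runs as root and may leave root-owned files in the source tree (make distclean also removes everything make clean would). Per-component extras, such as autoreconf -fiv for fdk-aac, still need to be run first.

```shell
#!/bin/sh
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() {
    if [ -n "$DRY_RUN" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Configure, compile, install, and clean one already-unpacked source tree.
# Usage: build_in SOURCE_DIR [CONFIGURE_ARGS...]
# The subshell keeps the cd from changing the caller's working directory.
build_in() {
    dir=$1
    shift
    (
        cd "$dir" || exit 1
        run ./configure "$@" &&
        run make -j2 &&
        run sudo make install &&
        run sudo make distclean
    )
}
```

For example: build_in ~/ffmpeg_sources/x264 --host=arm-unknown-linux-gnueabi --enable-shared --disable-opencl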

Description from Wikipedia:

Fraunhofer FDK AAC is an open-source software library for encoding and decoding Advanced Audio Coding (AAC) format audio, developed by Fraunhofer IIS… It supports several Audio Object Types including MPEG-2 and MPEG-4 AAC LC, HE-AAC (AAC LC + SBR), HE-AACv2 (LC + SBR + PS) and AAC-LD (low delay) for real-time communication

If you require libfdk-aac for your FFmpeg build, the following parameter must be passed to the FFmpeg configure script (see the FFmpeg build section, below):

--enable-libfdk-aac

To obtain, compile, and install libfdk-aac, issue the following commands from the console or a terminal session:

cd ~/ffmpeg_sources
wget -O fdk-aac.tar.gz https://github.com/mstorsjo/fdk-aac/tarball/master
tar xzvf fdk-aac.tar.gz
cd mstorsjo-fdk-aac*
autoreconf -fiv
./configure --enable-shared
sudo make -j2
sudo make install
sudo make clean
sudo make distclean

Description from APT: MP3 encoding library (development)

The current working directory does not matter when installing via apt-get, as the target directories for the files within a package are determined by the package itself.

LAME (LAME Ain't an MP3 Encoder) is a research project for learning about and improving MP3 encoding technology. LAME includes an MP3 encoding library, a simple frontend application, and other tools for sound analysis, as well as convenience tools.

MP3 audio encoder.

If you require libmp3lame for your FFmpeg build, the following parameter must be passed to the FFmpeg configure script (see the FFmpeg build section, below):

--enable-libmp3lame

To install libmp3lame from the APT repository, issue the following command from the console or a terminal session:

sudo apt-get install libmp3lame-dev

Version required: 3.98.3 or later

Version installed: 3.99.5+repack1-7+deb8u1

Description from APT: Opus codec library development files

The Opus codec is designed for interactive speech and audio transmission over the Internet. It is designed by the IETF Codec Working Group and incorporates technology from Skype's SILK codec and Xiph.Org's CELT codec.

Opus audio decoder and encoder.

If you require libopus for your FFmpeg build, the following parameter must be passed to the FFmpeg configure script (see the FFmpeg build section, below):

--enable-libopus

To install libopus from the APT repository, issue the following command from the console or a terminal session:

sudo apt-get install libopus-dev

Version required: 1.1 or later

Version installed: 1.1-2

The wget command requests a specific version of libvpx (1.5.0); at the time of writing I am unsure whether newer versions work as well with FFmpeg.

When run, configure reports:

--enable-shared is only supported on ELF; assuming this is OK

It is OK: Linux on the Raspberry Pi uses the ELF binary format, so this warning can be ignored.

Description from APT: VP8 and VP9 video codec (development files)

VP8 and VP9 are open video codecs, originally developed by On2 and released as open source by Google Inc. They are the successor of the VP3 codec, on which the Theora codec was based.

If you require libvpx for your FFmpeg build, the following parameter must be passed to the FFmpeg configure script (see the FFmpeg build section, below):

--enable-libvpx

To obtain, compile, and install libvpx, issue the following commands from the console or a terminal session:

cd ~/ffmpeg_sources
wget http://storage.googleapis.com/downloads.webmproject.org/releases/webm/libvpx-1.5.0.tar.bz2
tar xjvf libvpx-1.5.0.tar.bz2
cd libvpx-1.5.0
PATH="$HOME/bin:$PATH" ./configure --enable-shared --disable-examples --disable-unit-tests
PATH="$HOME/bin:$PATH" make -j2
sudo make install
sudo make clean
sudo make distclean

PATH="$HOME/bin:$PATH"

PATH is an environment variable listing the directories the shell searches for executable programs, such as the tools invoked during the compilation process. Prefixing a command with an assignment like the one above adds a directory to the front of that list for that single command only; it does not affect where shared libraries are found.

The /home/pi/bin directory did not exist on my machine at the time the above code block was run; all of the files required to build FFmpeg had been installed in directories within the default /usr hierarchy.
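To check whether a directory is currently on the PATH, a small POSIX shell function is enough; path_contains is a hypothetical name, and it matches whole entries only, so /opt/demo would not match /opt/demo/bin.

```shell
#!/bin/sh
# Succeed if DIR is one of the colon-separated entries in $PATH.
# Wrapping PATH in colons lets the first and last entries match too.
path_contains() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example: path_contains "$HOME/bin" || echo "$HOME/bin is not on the PATH"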

The following code builds FFmpeg from scratch, using the latest source version, and utilising shared libraries for codec components.

The current versions of required codecs and components are used in most instances in a bid to rule out incompatibilities due to mix-and-match versions of components.

Note: the directory /home/pi/bin is not required to exist before running the FFmpeg compilation routine.

As noted in each codec-specific section, above, if a specific codec is required in your build of FFmpeg, the appropriate parameter must be passed to FFmpeg's configure script.
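Since the configure flags depend on which codecs were installed, they can be assembled from a list. In the sketch below, codec_flags is a hypothetical helper; it also adds the licence switches FFmpeg's configure expects, namely --enable-gpl for libx264 and --enable-nonfree for libfdk-aac (required at least when fdk-aac is combined with a GPL build, as in this guide).

```shell
#!/bin/sh
# Print FFmpeg configure flags for the named codec libraries.
# Usage: codec_flags x264 fdk-aac mp3lame opus vpx ...
codec_flags() {
    licence=""
    libs=""
    for codec in "$@"; do
        libs="$libs --enable-lib$codec"
        # Add each licence switch at most once.
        case $codec in
            x264)
                case $licence in *--enable-gpl*) ;; *) licence="$licence --enable-gpl" ;; esac ;;
            fdk-aac)
                case $licence in *--enable-nonfree*) ;; *) licence="$licence --enable-nonfree" ;; esac ;;
        esac
    done
    # Licence switches first, then library flags, without the leading space.
    all="$licence$libs"
    printf '%s\n' "${all# }"
}
```

For example: ./configure $(codec_flags x264 fdk-aac mp3lame opus vpx) --enable-shared (the command substitution is deliberately unquoted so that its output splits into separate arguments).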

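Once completed, FFmpeg installs the following executable files within the /usr/local/bin directory (ffserver was removed from FFmpeg in version 4.0, so builds of later versions, including current snapshots, install only the other three):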

ffmpeg

a very fast video and audio converter that can also grab from a live audio/video source. It can also convert between arbitrary sample rates and resize video on the fly with a high quality polyphase filter.

ffprobe

gathers information from multimedia streams and prints it in human- and machine-readable fashion. For example it can be used to check the format of the container used by a multimedia stream and the format and type of each media stream contained in it.

ffserver

a streaming server for both audio and video. It supports several live feeds, streaming from files and time shifting on live feeds. You can seek to positions in the past on each live feed, provided you specify a big enough feed storage.

ffplay

a very simple and portable media player using the FFmpeg libraries and the SDL library. It is mostly used as a testbed for the various FFmpeg APIs.

All descriptions are from the FFmpeg project's official documentation.

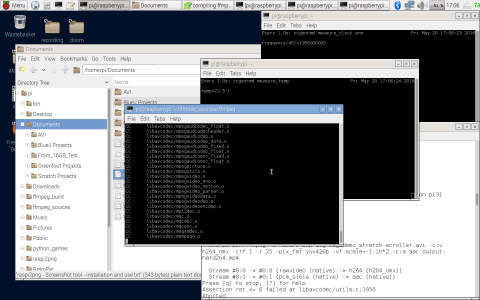

Note: during the compilation process, expect to see a number of warning messages emitted by the compiler - these are normal, and do not indicate failure.

To compile and build FFmpeg, issue the following commands at the console, or in a terminal emulator:

cd ~/ffmpeg_sources
wget http://ffmpeg.org/releases/ffmpeg-snapshot.tar.bz2
tar xjvf ffmpeg-snapshot.tar.bz2
cd ffmpeg
PATH="$HOME/bin:$PATH" ./configure \
  --pkg-config-flags="--static" \
  --extra-cflags="-fPIC -I$HOME/ffmpeg_build/include" \
  --extra-ldflags="-L$HOME/ffmpeg_build/lib" \
  --enable-gpl \
  --enable-libass \
  --enable-libfdk-aac \
  --enable-libfreetype \
  --enable-libmp3lame \
  --enable-libopus \
  --enable-libtheora \
  --enable-libvorbis \
  --enable-libvpx \
  --enable-libx264 \
  --enable-nonfree \
  --enable-pic \
  --extra-ldexeflags=-pie \
  --enable-shared

On a Raspberry Pi 3, overclocked to 1350 MHz, the make command for FFmpeg took approximately 35 minutes.

PATH="$HOME/bin:$PATH" make -j2
sudo make install
sudo make distclean
hash -r

The final, critical step is to update the links between FFmpeg and the shared codec libraries, by using ldconfig to configure the dynamic linker run-time bindings:

sudo ldconfig

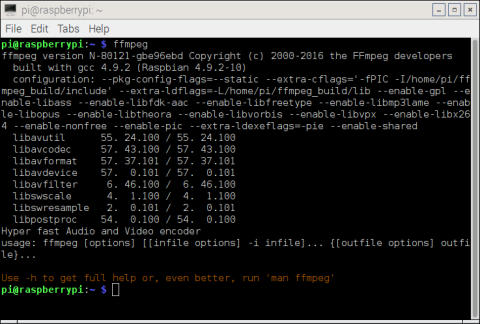

When the ffmpeg command is issued at a terminal a successful build should output information similar to that shown in the following screen:

If the ldconfig step is omitted, issuing the ffmpeg command may produce an error similar to the following:

ffmpeg: error while loading shared libraries: libavdevice.so.57: cannot open shared object file: No such file or directory

On some builds the ffmpeg command may run correctly, but the error may be triggered when a parameter is passed, for example to query the codecs available within the ffmpeg installation:

ffmpeg -codecs

The same solution applies, namely rebuilding the library cache using the ldconfig command.
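Two checks can help narrow down this kind of error. The sketch below defines hypothetical helpers: missing_libs lists the libraries that ldd reports as "not found" for a program, and in_ld_cache succeeds if a library appears in the linker cache printed by ldconfig -p (on Raspbian ldconfig lives in /sbin, which may not be on a normal user's PATH).

```shell
#!/bin/sh
# Read ldd output on stdin and print each library reported as not found.
# Usage: ldd "$(command -v ffmpeg)" | missing_libs
missing_libs() {
    awk '/=> not found/ { print $1 }'
}

# Read `ldconfig -p` output on stdin; succeed if library NAME is listed.
# Usage: /sbin/ldconfig -p | in_ld_cache libavdevice.so.57
in_ld_cache() {
    awk -v lib="$1" '$1 == lib { found = 1 } END { exit !found }'
}
```

If in_ld_cache fails for a library that is present in /usr/local/lib, running sudo ldconfig again should add it to the cache.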

The FFmpeg build commands above retrieve the source for the latest FFmpeg development snapshot, contained within an archive named ffmpeg-snapshot.tar.bz2. Should you require a specific version, the ffmpeg.org site maintains an archive of the source code for each release. To retrieve version 2.8.4, for example, update the code as follows:

wget http://ffmpeg.org/releases/ffmpeg-2.8.4.tar.bz2

tar xjvf ffmpeg-2.8.4.tar.bz2

# rename version specific ffmpeg folder

mv ffmpeg-2.8.4 ffmpeg

Details for each major FFmpeg release can be found here:

http://ffmpeg.org/download.html#releases
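Switching between the snapshot and a specific release can also be scripted. As a sketch (the function names are hypothetical), ffmpeg_archive_url builds the download address following the naming used above, and fetch_ffmpeg downloads and unpacks it, renaming a release's version-specific folder to ffmpeg so that the remaining build steps are unchanged. It assumes it is run from ~/ffmpeg_sources and that no ffmpeg folder exists there yet.

```shell
#!/bin/sh
# Print the download URL for a release version such as 2.8.4,
# or for the development snapshot when no version (or "snapshot") is given.
ffmpeg_archive_url() {
    version=${1:-snapshot}
    printf 'http://ffmpeg.org/releases/ffmpeg-%s.tar.bz2\n' "$version"
}

# Download and unpack the chosen archive so the source ends up in ./ffmpeg.
fetch_ffmpeg() {
    version=${1:-snapshot}
    archive="ffmpeg-$version.tar.bz2"
    wget -O "$archive" "$(ffmpeg_archive_url "$version")" || return 1
    tar xjvf "$archive" || return 1
    # The snapshot already unpacks to ./ffmpeg; releases unpack to ./ffmpeg-VERSION.
    if [ "$version" != snapshot ]; then
        mv "ffmpeg-$version" ffmpeg
    fi
}
```

For example: cd ~/ffmpeg_sources && fetch_ffmpeg 2.8.4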

A complete all-in-one script of the build steps in this guide is available here, along with instructions for creating and executing the script file.
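Once ffmpeg runs, it is worth confirming that the intended codec flags were actually compiled in. ffmpeg -version prints a "configuration:" line listing the flags passed to configure; the sketch below (missing_flags is a hypothetical helper) reads that text and prints any requested flag that is absent, matching whole flags so that, for example, --enable-gpl is not satisfied by --enable-gplv3.

```shell
#!/bin/sh
# Read text on stdin and print each FLAG argument that does not appear
# in it as a whole word.
# Usage: ffmpeg -version 2>&1 | missing_flags --enable-libx264 --enable-shared
missing_flags() {
    # Split the input into one word per line.
    words=$(tr ' \t' '\n\n')
    for flag in "$@"; do
        printf '%s\n' "$words" | grep -qxF -e "$flag" || printf '%s\n' "$flag"
    done
}
```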

The FFmpeg suite facilitates the modification and transcoding (conversion between formats) of audio and/or video files with almost unlimited flexibility, and as such is a huge topic in its own right. The following are some examples to introduce the basic concepts and functionality.

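Note: the order of the parameters is significant. Options apply to the file that follows them, so input options are placed before the -i they apply to, and output options before the output file name.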

Set input and output file names:

Parameter: -i

(the output file is specified as the last item and does not require a specific parameter; its extension is used to set the output format automatically, although the format can also be specified explicitly using the -f parameter)

ffmpeg -i input.mkv output.mp4

Set video and audio format of output file:

Parameters: -c:v, -c:a

ffmpeg -i input.mkv -c:v libx264 -c:a aac output.mp4

Set a specific format for x264 video - planar 4:2:0 (for Twitter and Omxplayer compatibility):

Parameter: -pix_fmt

ffmpeg -i input.mkv -c:v libx264 -pix_fmt yuv420p -c:a aac output.mp4

Set the quality of the video using near-lossless compression:

Parameter: -qp

ffmpeg -i input.mkv -c:v libx264 -qp 1 -c:a aac output.mp4

Alternatively, use Constant Rate Factor:

Parameter: -crf

ffmpeg -i input.mkv -c:v libx264 -crf 1 -c:a aac output.mp4

Note: for both -qp and -crf a value of 0 specifies lossless encoding; however, this produces corrupt video when played back using the Raspberry Pi's default Omxplayer, although the video is fine when viewed using other software, for example VLC.

Set number of threads to utilise when processing:

Parameter: -threads

ffmpeg -i input.mkv -threads 2 -c:v libx264 -c:a aac output.mp4

Set framerate of output file:

Parameter: -r

ffmpeg -i input.mkv -c:v libx264 -r 30 -c:a aac output.mp4

Set a start position and a duration, both in seconds, to process a portion of the input file:

Parameters: -ss, -t

ffmpeg -ss 11 -t 42 -i input.mkv -threads 2 -c:v libx264 -qp 1 -r 30 -pix_fmt yuv420p -vf scale=-1:ih*2 -c:a aac output.mp4
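Note that -t takes a duration rather than an end point (ffmpeg's -to option, which takes an end position, is an alternative). ffmpeg also accepts timestamps in HH:MM:SS form, but if you have noted the start and end times of a clip, the duration to pass to -t is simply end minus start. The sketch below (hypothetical helper names) handles whole-second values written as SS, MM:SS, or HH:MM:SS; the example above, starting at 11 seconds and ending at 53, gives 42.

```shell
#!/bin/sh
# Convert SS, MM:SS or HH:MM:SS (whole seconds only) to a number of seconds.
to_seconds() {
    echo "$1" | awk -F: '{ s = 0; for (i = 1; i <= NF; i++) s = s * 60 + $i; print s }'
}

# Print the duration between START and END, suitable for ffmpeg's -t.
# Usage: clip_duration START END
clip_duration() {
    start=$(to_seconds "$1")
    end=$(to_seconds "$2")
    echo $((end - start))
}
```

For example: ffmpeg -ss 11 -t "$(clip_duration 0:11 0:53)" -i input.mkv ... output.mp4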

Set a combination of parameters to output a file suitable for uploading to Twitter:

(4:2:0 Planar x264 video, at 30 fps, with near-lossless compression, scaled to twice the input file resolution, with aac encoded audio)

ffmpeg -i input.mkv -threads 2 -c:v libx264 -qp 1 -r 30 -pix_fmt yuv420p -vf scale=-1:ih*2 -c:a aac output.mp4
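To apply the same settings to a whole folder of recordings, a loop over the files will do. The sketch below is a hypothetical helper pair: output_name swaps the file extension, and convert_all runs the Twitter example on every .mkv file in the current directory. The -n option tells ffmpeg not to overwrite existing output files, and the scale filter is quoted so that the shell does not treat its * as a wildcard.

```shell
#!/bin/sh
# Replace the extension of FILE with EXT: output_name clip.mkv mp4 -> clip.mp4
output_name() {
    printf '%s\n' "${1%.*}.$2"
}

# Convert every .mkv file in the current directory to a Twitter-friendly .mp4.
convert_all() {
    for f in ./*.mkv; do
        # If nothing matches, the pattern is left unexpanded; skip it.
        [ -e "$f" ] || continue
        ffmpeg -n -i "$f" -threads 2 -c:v libx264 -qp 1 -r 30 -pix_fmt yuv420p \
            -vf 'scale=-1:ih*2' -c:a aac "$(output_name "$f" mp4)"
    done
}
```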


- Links: Raspberry Pi and Gaming Emulation via RetroPie

- Raspbian Linux Shell Commands and Tools – Part 1: Just the Basics

- Navigating the Raspberry Pi’s File System. Raspbian Linux Shell Commands and Tools – Part 2

- Recording Live Gameplay in RetroPie’s RetroArch Emulators Natively on the Raspberry Pi

- Overclocking and Stability Testing the Raspberry Pi 2: Overclocking in Depth

- Overclocking the Raspberry Pi 3: Thermal Limits and Optimising for Single vs Multicore Performance

- Jeff Thompson: Installing FFmpeg for Raspberry Pi

- FFmpeg.org Ubuntu Compilation Guide

- Compiling FFmpeg with Shared Libraries: Stackoverflow.com


© Retro Resolution

So, I followed this guide. And when I get to the part of running ffmpeg to see if it succeeded, I get this message “-bash: ffmpeg: command not found”


Hi, sorry to hear you’re having problems.

Perhaps the easiest approach to diagnosing this may be to use the RetroPie forum; below is a link to a thread where I posted about this guide, and have assisted some forum users with various issues:

https://retropie.org.uk/forum/topic/2394/how-to-guide-recording-live-gameplay-in-retropie-s-retroarch-emulators-natively-on-the-raspberry-pi-and-twitch-streaming


Hi, you can see another tutorial here

http://engineer2you.blogspot.com/2016/10/rasbperry-pi-ffmpeg-install-and-stream.html


Thanks! With the combined info from these resources hopefully somebody can work out how to stream live gameplay from RetroArch emulators in RetroPie.


I’m not going to make an account for a forum that I’ll never use, other than for this one thing. Anyways, I got it working, but N64 and NES are both completely unplayable while recording. I got the newest retropie (4.?) and the newest retroarch (1.3.8?).


I’m glad you have it working, albeit not as well as would be hoped.

Unfortunately neither the NES nor the N64 are amongst the many emulators I’ve tried recording on – the Atari VCS / 2600, Atari 800 (custom retroarch build), SNES, Megadrive, 32X, Spectrum (Fuse), and PlayStation all record at full frame rate with all games I’ve tested.

The retroarch core N64 emulator is notoriously inefficient compared to the standalone Mupen64plus, even without the overhead of recording; it’s a common topic on the forum, where most users optimise for the non-retroarch version (which unfortunately means no ffmpeg features).

The n64 struggles to emulate many titles due to the nature of the GPU’s microcode; there are compatibility lists linked to in posts on the forum (you can browse and search without requiring an account). Super Mario 64 is probably the best performing title on the retroarch core – does recording work with this?

One major factor is the destination for the output files – are you recording to an external USB hard disk? This is a must for the more advanced emulators as writing to the Pi’s internally mounted SD card really affects performance.

The recording approach in the guide was first implemented on RetroPie 3.6, and subsequently tested in 3.7 and 3.8. The most recent version I’ve used it on is 4, release candidate 1.


Hi there, I have successfully installed FFmpeg on the Raspberry Pi and am streaming with it to a local web page.

Just for sharing: please visit our blog for the tutorial, thanks.

http://engineer2you.blogspot.com/2016/10/rasbperry-pi-ffmpeg-install-and-stream.html


Hi,

Thanks for the link. It’d be great to enable streaming using ffmpeg from within RetroArch itself – so far I’ve not had any success…


Hello. Just found this and am currently following the guide. I am on the step of installing fdk-aac, and for this step and the previous one installing x264, the options "--enable-libx264" and "--enable-libfdk-aac" were not recognized and were therefore ignored. Is this going to hinder the process? Is there any way I can fix this?


Sadly my site has been long dormant due to serious illness, but if you’re following the guide to achieve recording in retropie, there’s really no need – the current version of retroarch in use by retropie now has this facility built in.

I’d visit the RetroPie website for guidance, but the option is in the retroarch menu now

Best of luck!
